- R-PAS:

- Inter-rater reliability: overall Kappas are .85, and for individual responses (Meyer, Viglione and Mihura, 2017)

- R-PAS reliability data are comparable to or better than the well-established interrater reliability for the CS

- Exner presented test-retest reliability for 7 ratios and percentages; 6 had test-retest values over .75 at 1 year, while a measure of stress and distress was somewhat lower, as expected (.64)

- In Meyer et al., the median exact-agreement ICC for the 60 different variables included on the R-PAS profile pages was .92

- The reliability found among most R-PAS variables in current use is comparable to that of simple physical measurements in medicine and much better than judgments such as supervisors’ evaluations of job performance or surgeons’ diagnoses of breast abnormalities

- Based on the meta-analyses of Mihura et al. (2013) (not to mention several of the R-PAS publications cited above), … the estimated mean error rate for most R-PAS variables when using externally assessed validity criteria can be estimated as roughly 35% (Erard, 2012), which falls squarely within the typical range of variable-to-criterion error rates found for the individual scales and scores used in personality assessment (Erard and Viglione, 2014)

- Meyer, Finn, et al. established that these are superior to many, and equivalent to many other, medical tests and procedures

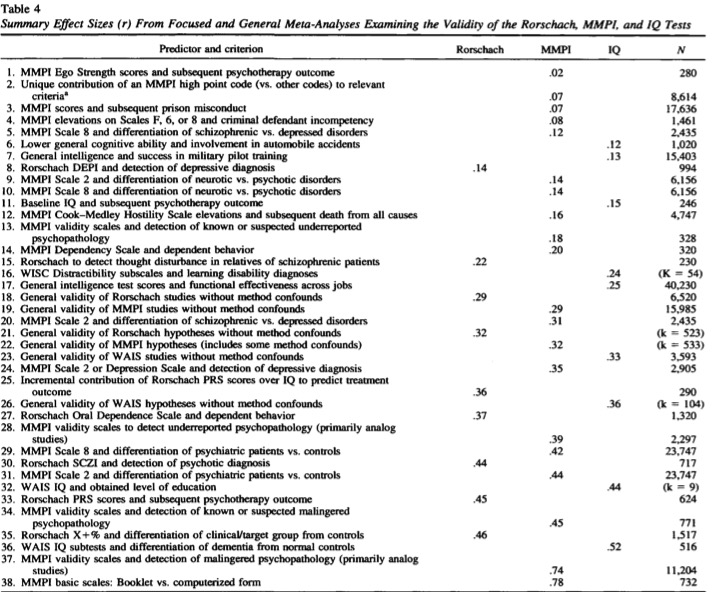

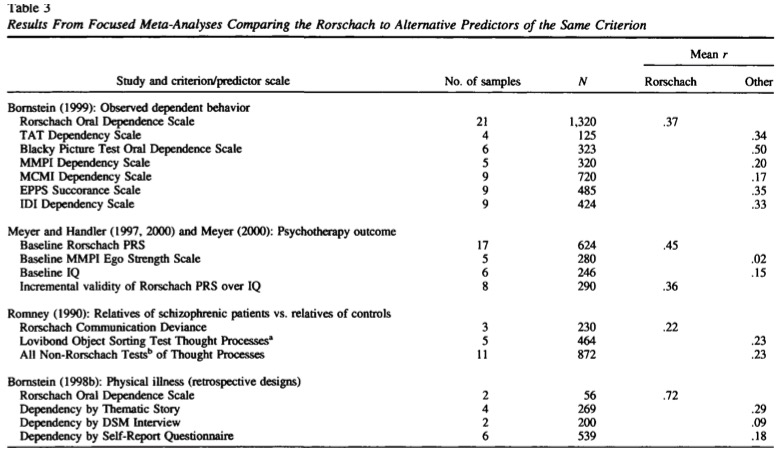
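
The kappa values cited above for inter-rater reliability correct raw agreement for the agreement two raters would reach by chance. As a rough illustration only, the sketch below computes Cohen's kappa for two raters who each assign one category per response. The function name `cohens_kappa` and the category labels are hypothetical and are not taken from the R-PAS manual or the cited studies.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning one category per item.

    kappa = (p_observed - p_expected) / (1 - p_expected)
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must code the same non-empty set of items.")

    n = len(rater_a)

    # Proportion of items on which the two raters gave the same code.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: product of each rater's marginal proportions,
    # summed over categories.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if p_expected == 1.0:
        # Both raters used a single identical category throughout.
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


if __name__ == "__main__":
    # Hypothetical codes for ten responses (labels are placeholders).
    rater_1 = ["A", "A", "B", "C", "A", "B", "A", "C", "A", "B"]
    rater_2 = ["A", "A", "B", "C", "A", "B", "B", "C", "A", "B"]
    print(round(cohens_kappa(rater_1, rater_2), 2))
```

Raw percent agreement alone would overstate reliability when one category dominates, which is why studies typically report kappa or an ICC instead.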

- (Erard & Viglione) The R-PAS manual, the Mihura et al. (2013) meta-analyses, and several other meta-analyses (e.g., Bornstein, 1996; Diener et al., 2011; Graceffo et al., 2014; Kiss et al., 2012; Walsh et al., 2012) provide ample data concerning the validity of individual R-PAS variables.

- From such data Young (2014) concluded, “With the meta-analysis that she [Mihura] has undertaken, the reliability and validity for the use of the R-PAS as an adjunct to tests such as the MMPI-2 in the forensic disability and related [context] no longer appears an issue” (Erard and Viglione, 2014)

- Validity: content validity, criterion-related validity (concurrent and predictive validity), construct validity, incremental validity

- Correlations between self-report tests, semi-structured interviews, and informant reports for PDs are moderate to inconsistent (Ganellen, 2007). The agreement between self-ratings and informant ratings of features of PDs is “modest at best”. Ganellen (2007):

“CS variables can identify and describe many of the enduring patterns of inner experience, interpersonal dysfunction, and behaviors associated with … DSM–IV PD diagnostic criteria. These include characteristic ways of perceiving oneself and others; interpreting events in an accurate as opposed to a distorted manner; effectiveness in managing emotional responses and affective intensity; capacity to relate to other people in an appropriate, prosocial manner; and …[capacity to exert] control over one’s impulses… in combination with information that may be obtained from interviews, self-report, other report, and clinician–therapist interaction”

Meyer and Archer (2001): There is no reason for the Rorschach to be singled out for particular criticism or specific praise. It produces reasonable validity, roughly on par with other commonly used tests.

The Rorschach assesses implicit or underlying personality characteristics… [this] recognizes the most consistent finding in the literature, which is the general lack of correlation between Rorschach scores and similarly named self-report scales, because the latter measure self-awareness/explicit personality characteristics.